9.28.22

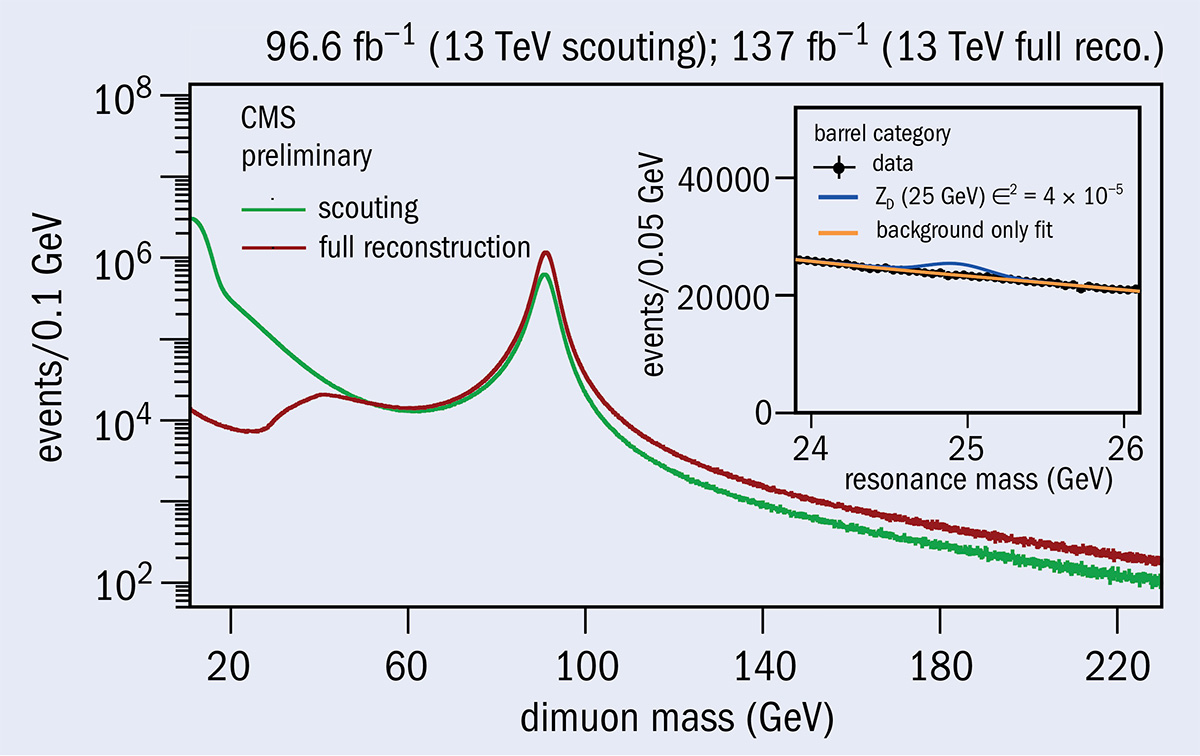

Fig. 1

Schematic representation of the right-handed Cartesian coordinate system adopted to describe the detector. Credit: The European Physical Journal C (2022).

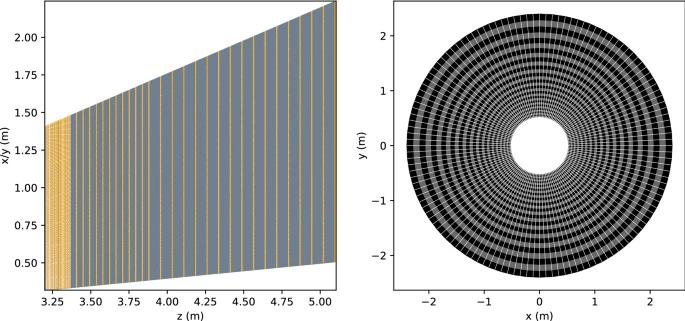

Fig. 2

Left: schematic representation of the detector's longitudinal sampling structure. Right: transverse view of the last active layer. Different colors represent different materials: copper (orange), stainless steel and lead (gray), air (white), and active sensors made of silicon (black).

There are more instructive images in the science paper.

A team of researchers from CERN, the Massachusetts Institute of Technology, and Staffordshire University has implemented a new algorithm for reconstructing particles at the Large Hadron Collider.

The Large Hadron Collider (LHC), the most powerful particle accelerator ever built, sits in a tunnel 100 meters underground at CERN, the European Organization for Nuclear Research, near Geneva in Switzerland. It hosts long-running experiments that help physicists worldwide learn more about the nature of the universe.

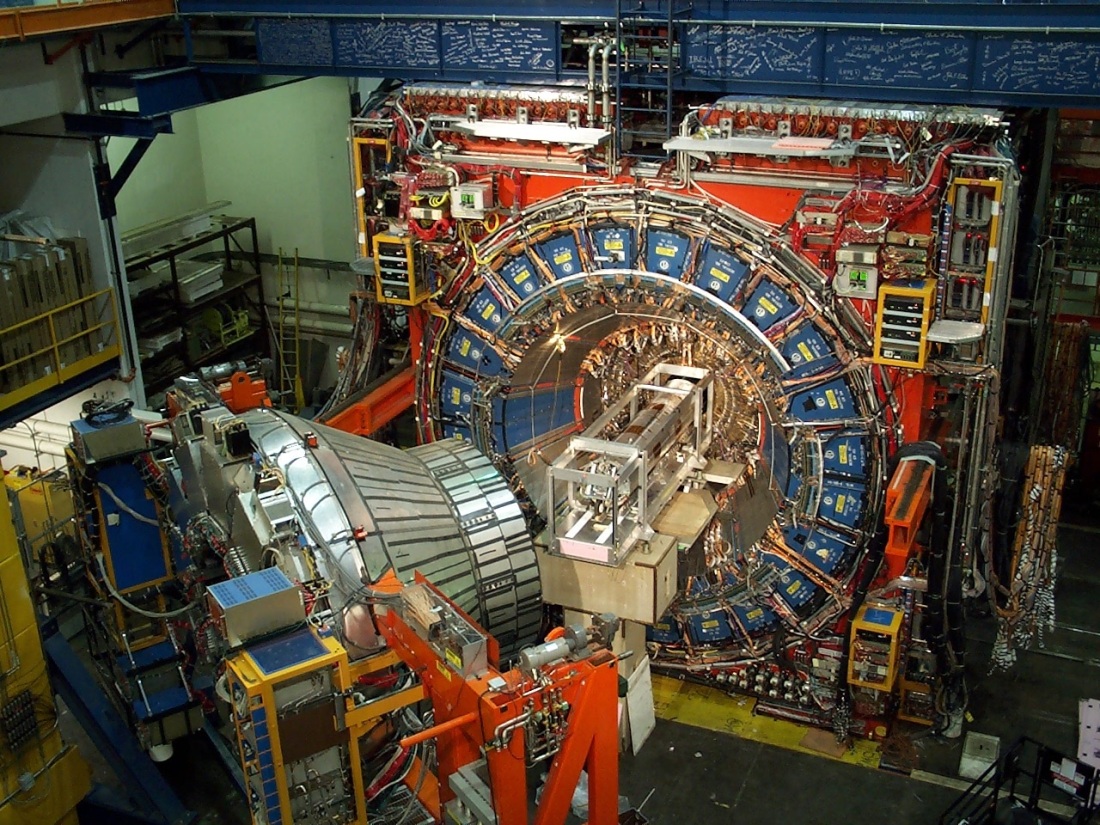

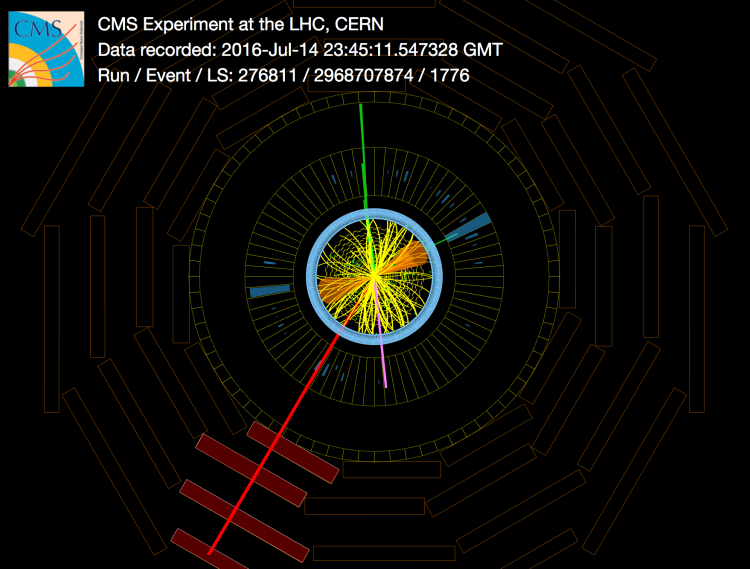

The project is part of the Compact Muon Solenoid (CMS) experiment [below], one of seven experiments installed at the LHC, each of which uses detectors to analyze the particles produced by collisions in the accelerator.

The project, the subject of a new paper published in The European Physical Journal C [below], was carried out ahead of the high-luminosity upgrade of the Large Hadron Collider.

The High-Luminosity Large Hadron Collider (HL-LHC) project aims to boost the performance of the LHC to increase the potential for discoveries after 2029. The HL-LHC will raise the number of simultaneous proton-proton interactions per bunch crossing (known as pileup) from about 40 to 200.

Professor Raheel Nawaz, Pro Vice-Chancellor for Digital Transformation at Staffordshire University, supervised the research. He explained that “limiting the increase of computing resource consumption at large pileups is a necessary step for the success of the HL-LHC physics program and we are advocating the use of modern machine learning techniques to perform particle reconstruction as a possible solution to this problem.”

He added that “this project has been both a joy and a privilege to work on and is likely to dictate the future direction of research on particle reconstruction by using a more advanced AI-based solution.”

Dr. Jan Kieseler from the Experimental Physics Department at CERN added that “this is the first single-shot reconstruction of about 1,000 particles from and in an unprecedentedly challenging environment with 200 simultaneous interactions each proton-proton collision. Showing that this novel approach, combining dedicated graph neural network layers (GravNet) and training methods (Object Condensation), can be extended to such challenging tasks while staying within resource constraints represents an important milestone towards future particle reconstruction.”
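Object Condensation, one of the two ingredients named in Dr. Kieseler's quote, trains a network to predict, for every detector hit, a condensation score β and a position in a learned clustering space. Below is a minimal pure-Python sketch of its two main loss terms, as we understand the published formulation: each hit carries a charge q = arctanh²(β) + q_min; hits are attracted to the highest-β hit (the "condensation point") of their own particle and repelled from those of other particles; and a β term rewards one confident point per particle while suppressing noise. The function names, the default values of `q_min` and `s_b`, and the convention of labeling noise hits with -1 are hypothetical choices for illustration. This is not the code used in the paper, and the GravNet layers that would produce β and the coordinates are not shown.

```python
import math
from typing import Dict, Sequence, Tuple

Point = Tuple[float, ...]


def charge(beta: float, q_min: float = 0.1) -> float:
    """Charge q = arctanh(beta)^2 + q_min (beta must lie in [0, 1))."""
    return math.atanh(beta) ** 2 + q_min


def sq_dist(a: Point, b: Point) -> float:
    """Squared Euclidean distance in the learned clustering space."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def condensation_loss(
    coords: Sequence[Point],
    betas: Sequence[float],
    object_ids: Sequence[int],
    q_min: float = 0.1,  # hypothetical default
    s_b: float = 1.0,  # hypothetical noise-suppression weight
) -> Tuple[float, float]:
    """Return (potential loss, beta loss); object_ids[i] == -1 marks noise."""
    n = len(betas)
    if n == 0:
        return 0.0, 0.0
    q = [charge(b, q_min) for b in betas]

    # Condensation point of each object: its hit with the highest beta.
    alphas: Dict[int, int] = {}
    for i, obj in enumerate(object_ids):
        if obj >= 0 and (obj not in alphas or betas[i] > betas[alphas[obj]]):
            alphas[obj] = i

    # Potential loss: attract hits to their own condensation point and
    # repel them (hinge at distance 1) from every other object's point.
    l_v = 0.0
    for j in range(n):
        for obj, a in alphas.items():
            d2 = sq_dist(coords[j], coords[a])
            if object_ids[j] == obj:
                l_v += q[j] * q[a] * d2
            else:
                l_v += q[j] * q[a] * max(0.0, 1.0 - math.sqrt(d2))
    l_v /= n

    # Beta loss: push each condensation point's beta toward 1 ...
    k = len(alphas)
    l_beta = sum(1.0 - betas[a] for a in alphas.values()) / k if k else 0.0
    # ... and push the betas of noise hits toward 0.
    noise = [b for b, obj in zip(betas, object_ids) if obj < 0]
    if noise:
        l_beta += s_b * sum(noise) / len(noise)
    return l_v, l_beta


# Two well-separated toy objects with two hits each.
coords = [(0.0, 0.0), (0.1, 0.0), (5.0, 5.0), (5.0, 5.1)]
betas = [0.9, 0.2, 0.8, 0.3]
ids = [0, 0, 1, 1]
print(condensation_loss(coords, betas, ids))
```

In this toy example the objects sit far apart, so only the small attractive terms contribute to the potential loss. Moving a hit of one object next to the other object's condensation point would raise the loss through both terms, which is the pressure that, in this scheme, teaches the network to separate overlapping particles.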

Shah Rukh Qasim, leading this project as part of his Ph.D. at CERN and Manchester Metropolitan University, says that “the amount of progress we have made on this project in the last three years is truly remarkable. It was hard to imagine we would reach this milestone when we started.”

Professor Martin Jones, Vice-Chancellor and Chief Executive at Staffordshire University, added that “CERN is one of the world’s most respected centers for scientific research and I congratulate the researchers on this project which is effectively paving the way for even greater discoveries in years to come.”

“Artificial Intelligence is continuously evolving to benefit many different industries and to know that academics at Staffordshire University and elsewhere are contributing to the research behind such advancements is both exciting and significant.”

Science paper:

European Physical Journal C

See the full article here.

Please help promote STEM in your local schools.

STEM Education Coalition

Meet CERN in a variety of places:

THE FOUR MAJOR PROJECT COLLABORATIONS

ATLAS

ALICE

CMS

LHCb

The European Organization for Nuclear Research [La Organización Europea para la Investigación Nuclear] [Organisation européenne pour la recherche nucléaire] [Europäische Organisation für Kernforschung] (CH) [CERN] map.

3D cut of the LHC dipole CERN LHC underground tunnel and tube.

The LHC magnets surround the beampipe along its 27 km circumference. Image: CERN

OTHER PROJECTS AT CERN

The European Organization for Nuclear Research [La Organización Europea para la Investigación Nuclear] [Organisation européenne pour la recherche nucléaire] [Europäische Organisation für Kernforschung] (CH) [CERN] AEGIS.

The European Organization for Nuclear Research [La Organización Europea para la Investigación Nuclear] [Organisation européenne pour la recherche nucléaire] [Europäische Organisation für Kernforschung] (CH) [CERN] ALPHA Antimatter Factory.

The European Organization for Nuclear Research [La Organización Europea para la Investigación Nuclear] [Organisation européenne pour la recherche nucléaire] [Europäische Organisation für Kernforschung] (CH) [CERN] ALPHA-g Detector.

The European Organization for Nuclear Research [La Organización Europea para la Investigación Nuclear] [Organisation européenne pour la recherche nucléaire] [Europäische Organisation für Kernforschung] (CH) [CERN] AMS.

The European Organization for Nuclear Research [La Organización Europea para la Investigación Nuclear] [Organisation européenne pour la recherche nucléaire] [Europäische Organisation für Kernforschung] (CH) [CERN] ASACUSA.

The European Organization for Nuclear Research [La Organización Europea para la Investigación Nuclear] [Organisation européenne pour la recherche nucléaire] [Europäische Organisation für Kernforschung] (CH) [CERN] ATRAP.

The European Organization for Nuclear Research [La Organización Europea para la Investigación Nuclear] [Organisation européenne pour la recherche nucléaire] [Europäische Organisation für Kernforschung] (CH) [CERN] Antiproton Decelerator.

The European Organization for Nuclear Research [La Organización Europea para la Investigación Nuclear] [Organisation européenne pour la recherche nucléaire] [Europäische Organisation für Kernforschung] (CH) [CERN] BASE: Baryon Antibaryon Symmetry Experiment.

The European Organization for Nuclear Research [La Organización Europea para la Investigación Nuclear] [Organisation européenne pour la recherche nucléaire] [Europäische Organisation für Kernforschung] (CH) [CERN] BASE instrument.

The European Organization for Nuclear Research [La Organización Europea para la Investigación Nuclear] [Organisation européenne pour la recherche nucléaire] [Europäische Organisation für Kernforschung] (CH) [CERN] CAST Axion Solar Telescope.

The European Organization for Nuclear Research [La Organización Europea para la Investigación Nuclear] [Organisation européenne pour la recherche nucléaire] [Europäische Organisation für Kernforschung] (CH) [CERN] CLOUD.

The European Organization for Nuclear Research [La Organización Europea para la Investigación Nuclear] [Organisation européenne pour la recherche nucléaire] [Europäische Organisation für Kernforschung] (CH) [CERN] COMPASS.

The European Organization for Nuclear Research [La Organización Europea para la Investigación Nuclear] [Organisation européenne pour la recherche nucléaire] [Europäische Organisation für Kernforschung] (CH) [CERN] CRIS experiment.

The European Organization for Nuclear Research [La Organización Europea para la Investigación Nuclear] [Organisation européenne pour la recherche nucléaire] [Europäische Organisation für Kernforschung] (CH) [CERN] DIRAC.

The European Organization for Nuclear Research [La Organización Europea para la Investigación Nuclear] [Organisation européenne pour la recherche nucléaire] [Europäische Organisation für Kernforschung] (CH) [CERN] FASER experiment schematic.

CERN FASER is designed to study the interactions of high-energy neutrinos and search for new as-yet-undiscovered light and weakly interacting particles. Such particles are dominantly produced along the beam collision axis and may be long-lived particles, travelling hundreds of metres before decaying. The existence of such new particles is predicted by many models beyond the Standard Model that attempt to solve some of the biggest puzzles in physics, such as the nature of dark matter and the origin of neutrino masses.

The European Organization for Nuclear Research [La Organización Europea para la Investigación Nuclear] [Organisation européenne pour la recherche nucléaire] [Europäische Organisation für Kernforschung] (CH) [CERN] GBAR.

The European Organization for Nuclear Research [La Organización Europea para la Investigación Nuclear] [Organisation européenne pour la recherche nucléaire] [Europäische Organisation für Kernforschung] (CH) [CERN] ISOLDE. Looking down into the ISOLDE experimental hall.

The European Organization for Nuclear Research [La Organización Europea para la Investigación Nuclear] [Organisation européenne pour la recherche nucléaire] [Europäische Organisation für Kernforschung] (CH) [CERN] LHCf.

The European Organization for Nuclear Research [La Organización Europea para la Investigación Nuclear] [Organisation européenne pour la recherche nucléaire] [Europäische Organisation für Kernforschung] (CH) [CERN] The MoEDAL experiment: a new light on the high-energy frontier.

The European Organization for Nuclear Research [La Organización Europea para la Investigación Nuclear] [Organisation européenne pour la recherche nucléaire] [Europäische Organisation für Kernforschung] (CH) [CERN] NA62.

The European Organization for Nuclear Research [La Organización Europea para la Investigación Nuclear] [Organisation européenne pour la recherche nucléaire] [Europäische Organisation für Kernforschung] (CH) [CERN] NA64.

The European Organization for Nuclear Research [La Organización Europea para la Investigación Nuclear] [Organisation européenne pour la recherche nucléaire] [Europäische Organisation für Kernforschung] (CH) [CERN] n_TOF.

The European Organization for Nuclear Research [La Organización Europea para la Investigación Nuclear] [Organisation européenne pour la recherche nucléaire] [Europäische Organisation für Kernforschung] (CH) [CERN] TOTEM.

The European Organization for Nuclear Research [La Organización Europea para la Investigación Nuclear] [Organisation européenne pour la recherche nucléaire] [Europäische Organisation für Kernforschung] (CH) [CERN] UA9.

The European Organization for Nuclear Research [La Organización Europea para la Investigación Nuclear] [Organisation européenne pour la recherche nucléaire] [Europäische Organisation für Kernforschung] (CH) [CERN] The SPS’s new RF system.

The European Organization for Nuclear Research [La Organización Europea para la Investigación Nuclear] [Organisation européenne pour la recherche nucléaire] [Europäische Organisation für Kernforschung] (CH) [CERN] ProtoDUNE.

The European Organization for Nuclear Research [La Organización Europea para la Investigación Nuclear] [Organisation européenne pour la recherche nucléaire] [Europäische Organisation für Kernforschung] (CH) [CERN] HiRadMat: High Radiation to Materials.

The SND@LHC experiment consists of an emulsion/tungsten target for neutrinos (yellow) interleaved with electronic tracking devices (grey), followed downstream by a detector (brown) to identify muons and measure the energy of the neutrinos. (Image: Antonio Crupano/SND@LHC)