
10.5.23 [Just today in social media.]

Sam Jarman

A family of organic compounds released by trees could play a far greater role in cloud formation than previously thought. That is the conclusion of Lubna Dada at Switzerland’s Paul Scherrer Institute and an international team, who say that their insights could play a crucial role in predicting the future of Earth’s climate.

When trees come under stress, they release organic molecules that react with ozone, nitrate radicals and other compounds in the atmosphere. These reactions produce ultra-low-volatility organic compounds (ULVOCs), vapours that condense so readily that they can form tiny new particles.

In some cases, the particles formed from ULVOCs can grow large enough for water vapour to condense on their surfaces, seeding cloud droplets. Clouds have significant effects on Earth’s climate, many of which are poorly understood. The influence of ULVOCs therefore cannot be overlooked in global climate models.

The most important molecules involved in ULVOC formation belong to three classes of hydrocarbon: isoprene, monoterpenes and sesquiterpenes. To complicate matters, scientists believe climate change is now altering the emissions of these molecules into the atmosphere.

Cloudy at CERN

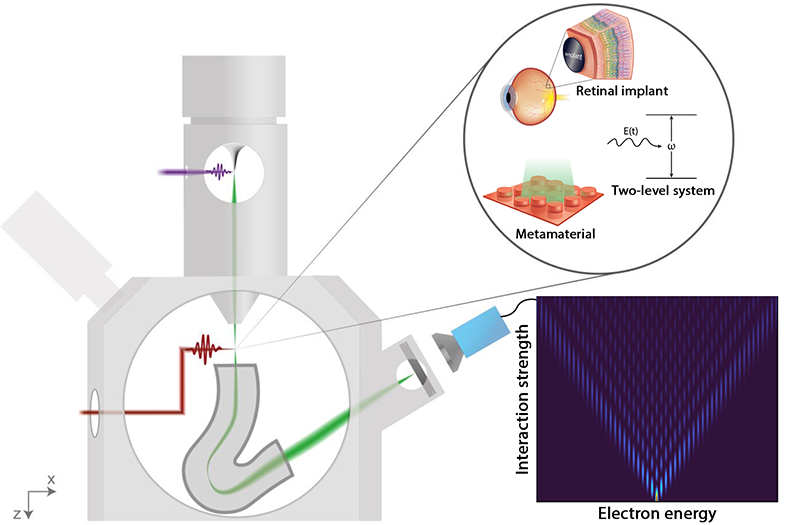

In the latest study, Dada’s team explored the ability of sesquiterpenes to form ULVOCs using the Cosmics Leaving Outdoor Droplets (CLOUD) chamber at CERN in Geneva.

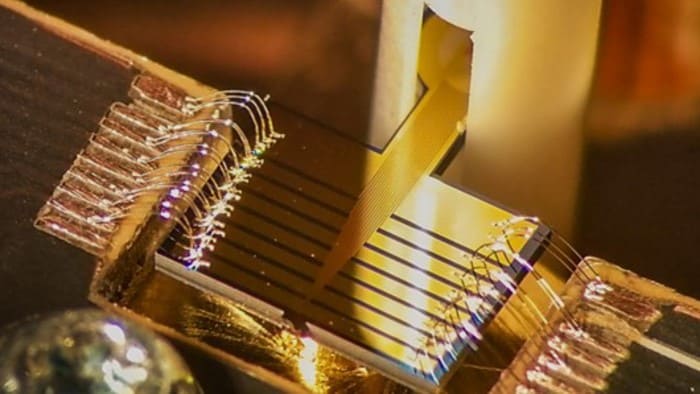

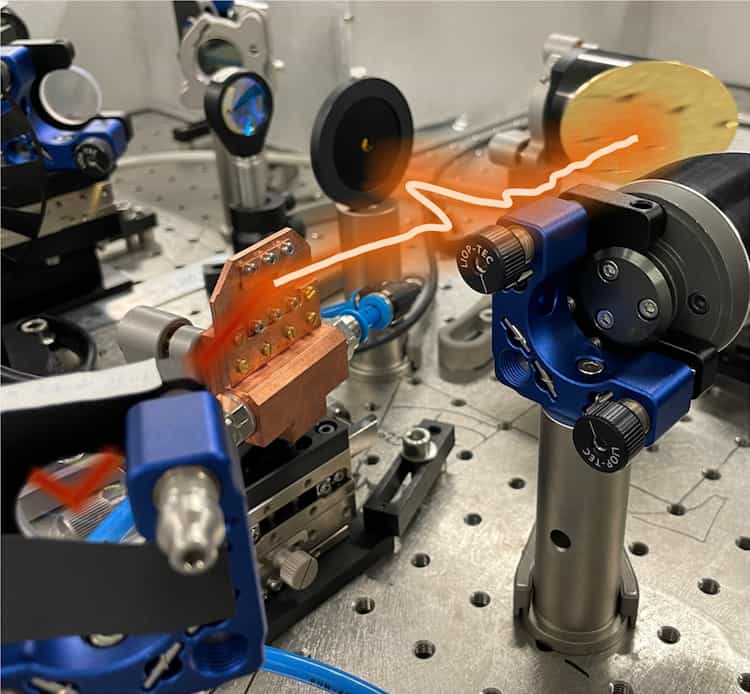

Could there be a link between galactic cosmic rays and cloud formation? An experiment at CERN is using the cleanest box in the world to find out. Credit: CERN.

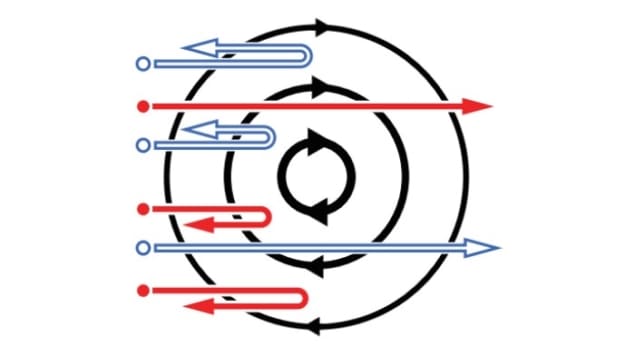

The “Cosmics Leaving Outdoor Droplets” (CLOUD) experiment uses a special cloud chamber to study the possible link between galactic cosmic rays and cloud formation. Based at CERN’s Proton Synchrotron (PS), CLOUD is the first experiment to use a high-energy physics accelerator to study atmospheric and climate science.

The results should contribute much to our fundamental understanding of aerosols and clouds, and their effect on climate.

In the CLOUD chamber, researchers can simulate the different atmospheric conditions involved in cloud formation.

“At almost 30 m³, this sealed climate chamber is the purest of its kind worldwide. It is so pure that it allows us to study sesquiterpenes even at the low concentrations recorded in the atmosphere,” Dada explains.

Starting with a mixture of only isoprene and monoterpenes, the team measured how the rate of new-particle formation, the first step towards cloud formation, changed inside the chamber as the concentration of sesquiterpenes was increased. The effect was immediate. Even when sesquiterpenes made up just 2% of the mixture inside the CLOUD chamber, the extra ULVOCs they produced had already doubled the particle formation rate.

As Dada explains, “This can be explained by the fact that a sesquiterpene molecule consists of 15 carbon atoms, while monoterpenes consist of only 10 and isoprenes only five.” Because their molecules are larger and heavier, sesquiterpenes yield oxidation products that are far less volatile than those of the other two, so these products condense more readily into particles.
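The carbon counts in Dada’s explanation follow from the fact that the parent terpenes are built from isoprene units, with the general formula (C5H8)n: isoprene is C5H8, monoterpenes are C10H16 and sesquiterpenes are C15H24. The short sketch below, which uses standard atomic masses and is an illustration rather than part of the study, shows how molecular weight grows with each added unit. Molar mass alone does not set volatility; it only illustrates the trend the quote points to.

```python
# Standard atomic masses in g/mol (conventional values, rounded).
CARBON = 12.011
HYDROGEN = 1.008


def terpene_formula(units: int) -> tuple[int, int]:
    """Return (carbon, hydrogen) counts for the parent terpene (C5H8)n."""
    return 5 * units, 8 * units


def molar_mass(carbons: int, hydrogens: int) -> float:
    """Molar mass in g/mol of a hydrocarbon CxHy."""
    return carbons * CARBON + hydrogens * HYDROGEN


# One, two and three isoprene units respectively.
for name, units in [("isoprene", 1), ("monoterpene", 2), ("sesquiterpene", 3)]:
    c, h = terpene_formula(units)
    print(f"{name:14s} C{c}H{h}  {molar_mass(c, h):6.1f} g/mol")
```

Run as is, it prints roughly 68 g/mol for isoprene, 136 g/mol for a monoterpene and 204 g/mol for a sesquiterpene.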

According to the team, the results show that the cloud-forming influence of sesquiterpenes should be included in future global climate models. Dada and colleagues hope that their study will help climate scientists make better predictions of how cloud formation, and its impact on Earth’s atmosphere, will change as the planet continues to warm.

Building on their techniques, the researchers now aim to gain a broader picture of how the climate has already been affected by emissions of human-made compounds. “Next, we and our CLOUD partners want to investigate what exactly happened during industrialization,” explains team member Imad El Haddad. “At this time, the natural atmosphere became increasingly mixed with anthropogenic gases such as sulphur dioxide, ammonia and other anthropogenic organic compounds.”

The research is described in Science Advances.

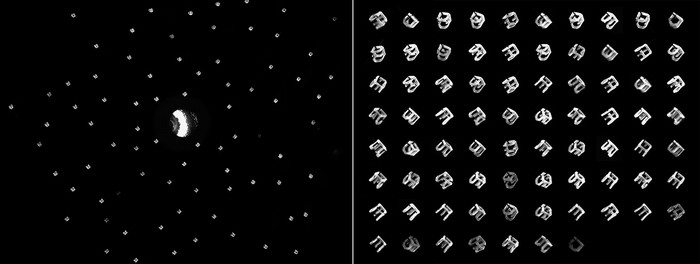

Fig. 1. Example pure biogenic new particle formation (NPF) experiment in CLOUD.

Here, we show a representative mixture run with all three biogenic volatile organic compounds (BVOCs; β-caryophyllene, α-pinene, and isoprene) in three concentration steps, with an injected ratio of 1:6:50 (β-caryophyllene:α-pinene:isoprene), 40 ppbv (parts per billion by volume) O₃, T = +5°C, relative humidity (RH) = 40%, and full UV lamp intensity; runs 12 to 14 (see table S1). (A) The combined particle number size distribution measured using a full suite of particle measuring instruments (see the “Particle counters” section in Materials and Methods). (B) Evolution of the measured precursor vapor concentrations: α-pinene, β-caryophyllene, and isoprene. The three experimental stages are separated by vertical gray lines. (C) Evolution of the formation rate of particles with diameter >1.7 nm (J₁.₇) and the condensation sink (CS). (D) Evolution of oxidation product concentrations measured with NO₃⁻-CIMS: total oxygenated organic molecules (OOMs), extremely low volatility organic compounds (ELVOCs), and ultralow volatility organic compounds (ULVOCs).
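The 1:6:50 injection ratio in the caption can be converted into each precursor’s share of the total mixture. The sketch below assumes the ratio is by volume (ppbv), as the caption’s units suggest; it is a back-of-envelope check, not a value reported by the authors. The hypothetical helper `mixing_fractions` simply divides each part by the sum of all parts.

```python
from fractions import Fraction


def mixing_fractions(ratio: dict[str, int]) -> dict[str, Fraction]:
    """Hypothetical helper: convert an injection ratio into each precursor's
    exact fraction of the total mixture."""
    total = sum(ratio.values())
    return {name: Fraction(part, total) for name, part in ratio.items()}


# Injection ratio from the Fig. 1 caption (assumed to be by volume, i.e. ppbv).
ratio = {"beta-caryophyllene": 1, "alpha-pinene": 6, "isoprene": 50}

for name, frac in mixing_fractions(ratio).items():
    print(f"{name:20s} {float(frac):6.1%}")
```

With 57 parts in total, β-caryophyllene (a sesquiterpene) makes up 1/57, or about 1.8% of the mixture, close to the roughly 2% sesquiterpene share quoted in the article.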


Physics World is a publication of the Institute of Physics. The Institute of Physics is a leading scientific society. We are a charitable organization with a worldwide membership of more than 50,000, working together to advance physics education, research and application.

We engage with policymakers and the general public to develop awareness and understanding of the value of physics and, through IOP Publishing, we are world leaders in professional scientific communications.