From The Sloan School of Management at The Massachusetts Institute of Technology

3.18.24

Meredith Somers

Credit: Andrii Chagovets/iStock | En-ROADS climate simulator

A free, updated simulator allows users to visualize environmental impacts and better guide climate decision-making in their organizations.

Businesses are some of the most powerful institutions in society. Companies influence popular opinion and policies through the products and services they offer, the advertising and communications that reach the public, and the lobbying and financial support they provide to candidates and ballot issues.

Businesses also play an important role in shaping the conversation around climate and sustainability and in determining what actions to take. But while it’s easy to see the devastating effects of climate change today — raging wildfires, massive floods, harsh droughts, and extreme storms — it’s harder to understand the underlying drivers and determine where and how climate actions fit in a corporate strategy. That’s where the En-ROADS global climate solutions simulator can help.

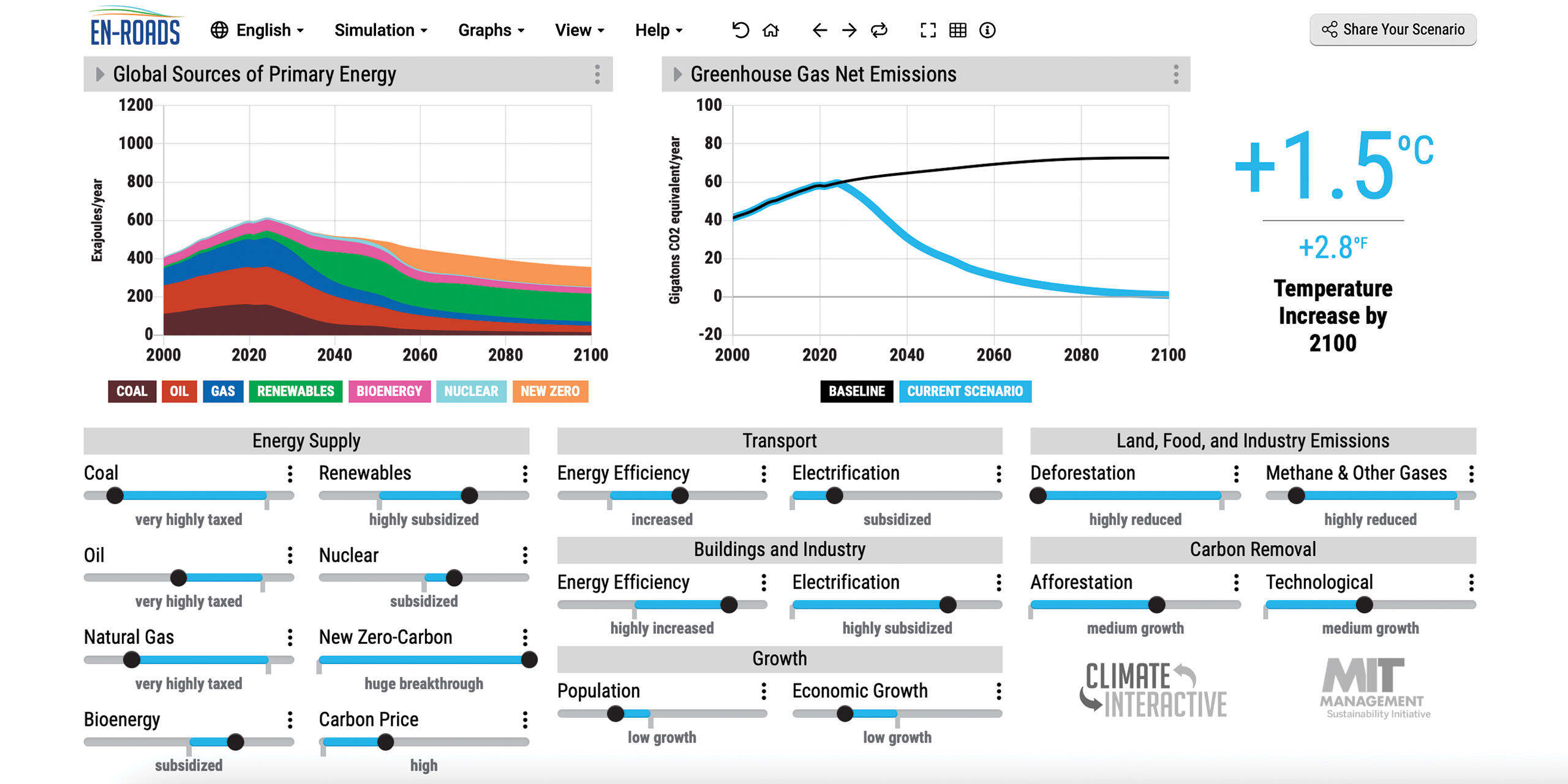

Co-developed by Climate Interactive and the MIT Sloan Sustainability Initiative, the free En-ROADS simulator uses current climate data and modeling to visualize the impact of environmental policies and industry actions — or inactions — through the year 2100. The MIT Climate Pathways Project uses En-ROADS to engage decision makers in government, business, and civil society.

“The benefit of a simulator like this is that people can create their own scenarios based on their business strategy and see how the world would look,” said Bethany Patten, a senior lecturer and director of policy and engagement at the Sustainability Initiative.

“People learn best from experience and experiment. But with climate change, experience comes too late, and experimentation is impossible,” said John Sterman, an MIT Sloan professor of management and faculty co-director of the initiative. “We only have one planet. We can’t run a randomized controlled trial to compare a planet with fossil fuels to one that doesn’t [have them] and see what happens after a few hundred years.”

Instead, the En-ROADS simulator provides immediate feedback to users as they maneuver levers that affect the economy, energy systems, and climate. Participants begin with the pathway the world is on now, which is projected to lead to more than 3 degrees Celsius of warming by 2100, a level that scientists predict will have catastrophic effects on the world.

A screen capture of the En-ROADS simulator.

See the full article here.

The MIT Sloan School of Management is the business school of the Massachusetts Institute of Technology, a private university in Cambridge, Massachusetts. MIT Sloan offers bachelor’s, master’s, and doctoral degree programs, as well as executive education. Its degree programs are among the most selective in the world. MIT Sloan emphasizes innovation in practice and research. Many influential ideas in management and finance originated at the school, including the Black–Scholes model, the Solow–Swan model, the random walk hypothesis, the binomial options pricing model, and the field of system dynamics. The faculty has included numerous Nobel laureates in economics and John Bates Clark Medal winners.

The MIT Sloan School of Management began in 1914 as the engineering administration curriculum (“Course 15”) in the MIT Department of Economics and Statistics. The scope and depth of this educational focus grew steadily in response to advances in the theory and practice of management.

A program offering a master’s degree in management was established in 1925. The world’s first university-based mid-career education program—the Sloan Fellows program—was created in 1931 under the sponsorship of Alfred P. Sloan, himself an 1895 MIT graduate, who was the chief executive officer of General Motors and has since been credited with creating the modern corporation. An Alfred P. Sloan Foundation grant established the MIT School of Industrial Management in 1952 with the charge of educating the “ideal manager”. In 1964, the school was renamed in Sloan’s honor as the Alfred P. Sloan School of Management. In the following decades, the school grew to the point that in 2000, management became the second-largest undergraduate major at MIT. In 2005, an undergraduate minor in management was opened to 100 students each year. In 2014, the school celebrated 100 years of management education at MIT.

Since its founding, the school has initiated many international efforts to improve regional economies and positively shape the future of global business. In the 1960s, the school played a leading role in founding the first Indian Institute of Management. Other initiatives include the MIT-China Management Education Project, the International Faculty Fellows Program, and partnerships with IESE Business School in Spain, Sungkyunkwan University [성균관대학교](KR), NOVA University Lisbon [Universidade NOVA de Lisboa](PT), the SKOLKOVO School of Management [Moskovskaya Shkola Upravleniya Skolkovo](RU), and Tsinghua University [清华大学](CN). In 2014, the school launched the MIT Regional Entrepreneurship Acceleration Program (REAP), which brings leaders from developing regions to MIT for two years to improve their economies. In 2015, MIT worked in collaboration with the Central Bank of Malaysia to establish The Asia School of Business MBA Malaysia [亞洲商學院](MY).

The Massachusetts Institute of Technology is a private land-grant research university in Cambridge, Massachusetts. The institute has an urban campus that extends more than a mile (1.6 km) alongside the Charles River. The institute also encompasses a number of major off-campus facilities such as the MIT Lincoln Laboratory, the MIT Bates Research and Engineering Center, and the Haystack Observatory, as well as affiliated laboratories such as the Broad Institute of MIT and Harvard and the Whitehead Institute.

Founded in 1861 in response to the increasing industrialization of the United States, Massachusetts Institute of Technology adopted a European polytechnic university model and stressed laboratory instruction in applied science and engineering. It has since played a key role in the development of many aspects of modern science, engineering, mathematics, and technology, and is widely known for its innovation and academic strength. It is frequently regarded as one of the most prestigious universities in the world.

Nobel laureates, Turing Award winners, and Fields Medalists have been affiliated with MIT as alumni, faculty members, or researchers. In addition, National Medal of Science recipients, National Medal of Technology and Innovation recipients, MacArthur Fellows, Marshall Scholars, Mitchell Scholars, Schwarzman Scholars, astronauts, and Chief Scientists of the U.S. Air Force have been affiliated with The Massachusetts Institute of Technology. The university also has a strong entrepreneurial culture, and MIT alumni have founded or co-founded many notable companies. The Massachusetts Institute of Technology is a member of the Association of American Universities.

Foundation and vision

In 1859, a proposal was submitted to the Massachusetts General Court to use newly filled lands in Back Bay, Boston for a “Conservatory of Art and Science”, but the proposal failed. A charter for the incorporation of the Massachusetts Institute of Technology, proposed by William Barton Rogers, was signed by John Albion Andrew, the governor of Massachusetts, on April 10, 1861.

Rogers, a professor from the University of Virginia, wanted to establish an institution to address rapid scientific and technological advances. He did not wish to found a professional school, but rather an institution combining elements of both professional and liberal education, proposing that:

“The true and only practicable object of a polytechnic school is, as I conceive, the teaching, not of the minute details and manipulations of the arts, which can be done only in the workshop, but the inculcation of those scientific principles which form the basis and explanation of them, and along with this, a full and methodical review of all their leading processes and operations in connection with physical laws.”

The Rogers Plan reflected the German research university model, emphasizing an independent faculty engaged in research, as well as instruction oriented around seminars and laboratories.

Early developments

Two days after The Massachusetts Institute of Technology was chartered, the first battle of the Civil War broke out. After a long delay through the war years, MIT’s first classes were held in the Mercantile Building in Boston in 1865. The new institute was founded as part of the Morrill Land-Grant Colleges Act to fund institutions “to promote the liberal and practical education of the industrial classes” and was a land-grant school. In 1863, under the same act, the Commonwealth of Massachusetts founded the Massachusetts Agricultural College, which developed into the University of Massachusetts Amherst. In 1866, the proceeds from land sales went toward new buildings in the Back Bay.

The Massachusetts Institute of Technology was informally called “Boston Tech”. The institute adopted the European polytechnic university model and emphasized laboratory instruction from an early date. Despite chronic financial problems, the institute saw growth in the last two decades of the 19th century under President Francis Amasa Walker. Programs in electrical, chemical, marine, and sanitary engineering were introduced, new buildings were built, and the size of the student body increased to more than one thousand.

The curriculum drifted to a vocational emphasis, with less focus on theoretical science. The fledgling school still suffered from chronic financial shortages, which diverted the attention of the MIT leadership. During these “Boston Tech” years, Massachusetts Institute of Technology faculty and alumni rebuffed Harvard University president (and former MIT faculty member) Charles W. Eliot’s repeated attempts to merge MIT with Harvard College’s Lawrence Scientific School. There would be at least six attempts to absorb MIT into Harvard. In its cramped Back Bay location, MIT could not afford to expand its overcrowded facilities, driving a desperate search for a new campus and funding. Eventually, the MIT Corporation approved a formal agreement to merge with Harvard, over the vehement objections of MIT faculty, students, and alumni. However, a 1917 decision by the Massachusetts Supreme Judicial Court effectively put an end to the merger scheme.

In 1916, The Massachusetts Institute of Technology administration and the MIT charter crossed the Charles River on the ceremonial barge Bucentaur built for the occasion, to signify MIT’s move to a spacious new campus largely consisting of filled land on a one-mile-long (1.6 km) tract along the Cambridge side of the Charles River. The neoclassical “New Technology” campus was designed by William W. Bosworth and had been funded largely by anonymous donations from a mysterious “Mr. Smith”, starting in 1912. In January 1920, the donor was revealed to be the industrialist George Eastman of Rochester, New York, who had invented methods of film production and processing, and founded Eastman Kodak. Between 1912 and 1920, Eastman donated $20 million ($236.6 million in 2015 dollars) in cash and Kodak stock to MIT.

Curricular reforms

In the 1930s, President Karl Taylor Compton and Vice-President (effectively Provost) Vannevar Bush emphasized the importance of pure sciences like physics and chemistry and reduced the vocational practice required in shops and drafting studios. The Compton reforms “renewed confidence in the ability of the Institute to develop leadership in science as well as in engineering”. Unlike Ivy League schools, Massachusetts Institute of Technology catered more to middle-class families, and depended more on tuition than on endowments or grants for its funding. The school was elected to the Association of American Universities in 1934.

Still, as late as 1949, the Lewis Committee lamented in its report on the state of education at The Massachusetts Institute of Technology that “the Institute is widely conceived as basically a vocational school”, a “partly unjustified” perception the committee sought to change. The report comprehensively reviewed the undergraduate curriculum, recommended offering a broader education, and warned against letting engineering and government-sponsored research detract from the sciences and humanities. The School of Humanities, Arts, and Social Sciences and the Sloan School of Management were formed in 1950 to compete with the powerful Schools of Science and Engineering. Previously marginalized faculties in the areas of economics, management, political science, and linguistics emerged into cohesive and assertive departments by attracting respected professors and launching competitive graduate programs. The School of Humanities, Arts, and Social Sciences continued to develop under the successive terms of the more humanistically oriented presidents Howard W. Johnson and Jerome Wiesner between 1966 and 1980.

The Massachusetts Institute of Technology’s involvement in military science surged during World War II. In 1941, Vannevar Bush was appointed head of the federal Office of Scientific Research and Development and directed funding to only a select group of universities, including MIT. Engineers and scientists from across the country gathered at the Massachusetts Institute of Technology’s Radiation Laboratory, established in 1940 to assist the British military in developing microwave radar. The work done there significantly affected both the war and subsequent research in the area. Other defense projects included gyroscope-based and other complex control systems for gunsight, bombsight, and inertial navigation under Charles Stark Draper’s Instrumentation Laboratory; the development of a digital computer for flight simulations under Project Whirlwind; and high-speed and high-altitude photography under Harold Edgerton. By the end of the war, The Massachusetts Institute of Technology had become the nation’s largest wartime R&D contractor (attracting some criticism of Bush), employing nearly 4,000 in the Radiation Laboratory alone and receiving in excess of $100 million ($1.2 billion in 2015 dollars) before 1946. Work on defense projects continued after the war. Post-war government-sponsored research at MIT included SAGE and guidance systems for ballistic missiles and Project Apollo.

These activities affected The Massachusetts Institute of Technology profoundly. A 1949 report noted the lack of “any great slackening in the pace of life at the Institute” to match the return to peacetime, remembering the “academic tranquility of the prewar years”, though acknowledging the significant contributions of military research to the increased emphasis on graduate education and rapid growth of personnel and facilities. The faculty doubled and the graduate student body quintupled during the terms of Karl Taylor Compton, president of The Massachusetts Institute of Technology between 1930 and 1948; James Rhyne Killian, president from 1948 to 1957; and Julius Adams Stratton, chancellor from 1952 to 1957, whose institution-building strategies shaped the expanding university. By the 1950s, The Massachusetts Institute of Technology no longer simply benefited the industries with which it had worked for three decades, and it had developed closer working relationships with new patrons, philanthropic foundations and the federal government.

In the late 1960s and early 1970s, student and faculty activists protested against the Vietnam War and The Massachusetts Institute of Technology’s defense research. In this period, the Massachusetts Institute of Technology’s various departments were researching helicopters, smart bombs, and counterinsurgency techniques for the war in Vietnam as well as guidance systems for nuclear missiles. The Union of Concerned Scientists was founded on March 4, 1969, during a meeting of faculty members and students seeking to shift the emphasis on military research toward environmental and social problems. The Massachusetts Institute of Technology ultimately divested itself from the Instrumentation Laboratory and moved all classified research off-campus to the MIT Lincoln Laboratory facility in 1973 in response to the protests. The student body, faculty, and administration remained comparatively unpolarized during what was a tumultuous time for many other universities. Johnson was seen to be highly successful in leading his institution to “greater strength and unity” after these times of turmoil. However, six Massachusetts Institute of Technology students were sentenced to prison terms at this time, and some former student leaders, such as Michael Albert and George Katsiaficas, are still indignant about MIT’s role in military research and its suppression of these protests. (Richard Leacock’s film, November Actions, records some of these tumultuous events.)

In the 1980s, there was more controversy at The Massachusetts Institute of Technology over its involvement in SDI (space weaponry) and CBW (chemical and biological warfare) research. More recently, The Massachusetts Institute of Technology’s research for the military has included work on robots, drones and ‘battle suits’.

Recent history

The Massachusetts Institute of Technology has kept pace with and helped to advance the digital age. In addition to developing the predecessors to modern computing and networking technologies, students, staff, and faculty members at Project MAC, the Artificial Intelligence Laboratory, and the Tech Model Railroad Club wrote some of the earliest interactive computer video games like Spacewar! and created much of modern hacker slang and culture. Several major computer-related organizations have originated at MIT since the 1980s: Richard Stallman’s GNU Project and the subsequent Free Software Foundation were founded in the mid-1980s at the AI Lab; the MIT Media Lab was founded in 1985 by Nicholas Negroponte and Jerome Wiesner to promote research into novel uses of computer technology; the World Wide Web Consortium standards organization was founded at the Laboratory for Computer Science in 1994 by Tim Berners-Lee; the MIT OpenCourseWare project has made course materials for over 2,000 Massachusetts Institute of Technology classes available online free of charge since 2002; and the One Laptop per Child initiative to expand computer education and connectivity to children worldwide was launched in 2005.

The Massachusetts Institute of Technology was named a sea-grant college in 1976 to support its programs in oceanography and marine sciences and was named a space-grant college in 1989 to support its aeronautics and astronautics programs. Despite diminishing government financial support over the past quarter century, MIT launched several successful development campaigns to significantly expand the campus: new dormitories and athletics buildings on west campus; the Tang Center for Management Education; several buildings in the northeast corner of campus supporting research into biology, brain and cognitive sciences, genomics, biotechnology, and cancer research; and a number of new “backlot” buildings on Vassar Street including the Stata Center. Construction on campus in the 2000s included expansions of the Media Lab, the Sloan School’s eastern campus, and graduate residences in the northwest. In 2006, President Hockfield launched the MIT Energy Research Council to investigate the interdisciplinary challenges posed by increasing global energy consumption.

In 2001, inspired by the open source and open access movements, The Massachusetts Institute of Technology launched “OpenCourseWare” to make the lecture notes, problem sets, syllabi, exams, and lectures from the great majority of its courses available online for no charge, though without any formal accreditation for coursework completed. While the cost of supporting and hosting the project is high, OCW expanded in 2005 to include other universities as a part of the OpenCourseWare Consortium, which currently includes more than 250 academic institutions with content available in at least six languages. In 2011, The Massachusetts Institute of Technology announced it would offer formal certification (but not credits or degrees) to online participants completing coursework in its “MITx” program, for a modest fee. The “edX” online platform supporting MITx was initially developed in partnership with Harvard and its analogous “HarvardX” initiative. The courseware platform is open source, and other universities have already joined and added their own course content. In March 2009, the Massachusetts Institute of Technology faculty adopted an open-access policy to make its scholarship publicly accessible online.

The Massachusetts Institute of Technology has its own police force. Three days after the Boston Marathon bombing of April 2013, MIT Police patrol officer Sean Collier was fatally shot by the suspects Dzhokhar and Tamerlan Tsarnaev, setting off a violent manhunt that shut down the campus and much of the Boston metropolitan area for a day. One week later, Collier’s memorial service was attended by more than 10,000 people, in a ceremony hosted by the Massachusetts Institute of Technology community with thousands of police officers from the New England region and Canada. On November 25, 2013, The Massachusetts Institute of Technology announced the creation of the Collier Medal, to be awarded annually to “an individual or group that embodies the character and qualities that Officer Collier exhibited as a member of The Massachusetts Institute of Technology community and in all aspects of his life”. The announcement further stated that “Future recipients of the award will include those whose contributions exceed the boundaries of their profession, those who have contributed to building bridges across the community, and those who consistently and selflessly perform acts of kindness”.

In September 2017, the school announced the creation of an artificial intelligence research lab called the MIT-IBM Watson AI Lab. IBM will spend $240 million over the next decade, and the lab will be staffed by MIT and IBM scientists. In October 2018 MIT announced that it would open a new Schwarzman College of Computing dedicated to the study of artificial intelligence, named after lead donor and The Blackstone Group CEO Stephen Schwarzman. The focus of the new college is to study not just AI, but interdisciplinary AI education, and how AI can be used in fields as diverse as history and biology. The cost of buildings and new faculty for the new college is expected to be $1 billion upon completion.

The Caltech/MIT Advanced aLIGO was designed and constructed by a team of scientists from California Institute of Technology, Massachusetts Institute of Technology, and industrial contractors, and funded by the National Science Foundation.

It was designed to open the field of gravitational-wave astronomy through the detection of gravitational waves predicted by general relativity. Gravitational waves were detected for the first time by the LIGO detector in 2015. For contributions to the LIGO detector and the observation of gravitational waves, two Caltech physicists, Kip Thorne and Barry Barish, and Massachusetts Institute of Technology physicist Rainer Weiss won the Nobel Prize in physics in 2017. Weiss, who is also a Massachusetts Institute of Technology graduate, designed the laser interferometric technique, which served as the essential blueprint for LIGO.

The mission of The Massachusetts Institute of Technology is to advance knowledge and educate students in science, technology, and other areas of scholarship that will best serve the nation and the world in the twenty-first century. We seek to develop in each member of The Massachusetts Institute of Technology community the ability and passion to work wisely, creatively, and effectively for the betterment of humankind.